Intuitive Description

"Just grab a thing and compare the other things with it." The trick is that Quicksort "grabs" and "compares" intelligently, avoiding unnecessary comparisons and allowing it to sort a collection in $O(N \log N)$ time on average. Specifically, this is achieved by partitioning the collection around the thing we just grabbed (called the "pivot") into two smaller collections. Everything smaller than or equal to the pivot goes into the left sub-collection, and everything else goes into the right sub-collection. The two sub-collections can then be Quicksort-ed independently, recursively. The recursion terminates when a sub-collection contains fewer than two elements.
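
The description above maps almost word for word onto a short, non-in-place sketch. This is only an illustration of the idea, assuming the first element is grabbed as the pivot; the function name `quicksort` and the choice of pivot are assumptions made here, not taken from the original code.

```python
def quicksort(items):
    """Return a new sorted list; a direct transcription of the intuitive description."""
    # Recursion terminates when the collection has fewer than two elements.
    if len(items) < 2:
        return list(items)
    # Assumption for illustration: grab the first element as the pivot.
    pivot, rest = items[0], items[1:]
    # Everything smaller than or equal to the pivot goes left, everything else right.
    left = [x for x in rest if x <= pivot]
    right = [x for x in rest if x > pivot]
    # The two sub-collections are sorted independently, recursively.
    return quicksort(left) + [pivot] + quicksort(right)
```

This version allocates new lists at every level, so it trades memory for readability.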

Code and Analysis of Computational Complexity

The code for Quicksort is fairly straightforward:

Most of the work is performed in the partition method, which can be implemented in-place.
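
A minimal in-place sketch of the two routines described above might look as follows. It assumes the Lomuto partition scheme with the last element as the pivot; the names `partition` and `quicksort` and that choice of scheme are assumptions for illustration, and the original implementation may differ.

```python
def partition(items, lo, hi):
    """Partition items[lo..hi] in place; return the final index of the pivot."""
    pivot = items[hi]  # assumption: the last element is the pivot
    boundary = lo      # items[lo..boundary-1] hold elements <= pivot
    for i in range(lo, hi):
        if items[i] <= pivot:
            items[i], items[boundary] = items[boundary], items[i]
            boundary += 1
    # Put the pivot between the two sub-collections.
    items[boundary], items[hi] = items[hi], items[boundary]
    return boundary


def quicksort(items, lo=0, hi=None):
    """Sort items[lo..hi] in place."""
    if hi is None:
        hi = len(items) - 1
    if lo >= hi:  # fewer than two elements: nothing to do
        return
    p = partition(items, lo, hi)
    quicksort(items, lo, p - 1)
    quicksort(items, p + 1, hi)
```

Calling `quicksort(data)` on a list sorts it in place; no auxiliary list is allocated, only the recursion stack is used.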

The computational complexity of Quicksort depends on the selection of the pivot element. In the best case, the selected pivot is the median of the collection and the partition step divides the collection into two smaller collections of (nearly) identical size. Since the size of the collection being sorted is halved at each step, the recursion is about $\log N$ levels deep, and each level performs $O(N)$ work in its partition steps, so the best case complexity of Quicksort is $O(N \log N)$. In the worst case, the selected pivot is the minimum or maximum of the collection, and the partition step achieves very little: it only separates the pivot from the remaining $N - 1$ elements. The recursion is then about $N$ levels deep, and the worst case complexity is $O(N^2)$.
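
The two bounds can be made concrete with recurrences. The following is a sketch, assuming that partitioning $N$ elements costs $cN$ for some constant $c$ (the symbols $T$ and $c$ are introduced here for illustration):

$$
\begin{aligned}
\text{Best case:}\quad T(N) &= 2\,T(N/2) + cN \\
&= 2^k\,T(N/2^k) + k\,cN \\
&= N\,T(1) + cN\log_2 N \quad (k = \log_2 N) \\
&= O(N \log N) \\[6pt]
\text{Worst case:}\quad T(N) &= T(N-1) + cN \\
&= T(1) + c\sum_{i=2}^{N} i \\
&= T(1) + c\left(\frac{N(N+1)}{2} - 1\right) \\
&= O(N^2)
\end{aligned}
$$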

There are several ways to select the pivot element, the simplest being to select the first, last or middle element of the collection. Since selecting the first or last element can lead to worst-case performance if the array is already sorted, selecting the middle element is the best option of the three.
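
The three selection rules can be written as a small helper. This is a sketch; the function name `pivot_index` and the `mode` strings are hypothetical and chosen only for illustration.

```python
def pivot_index(lo, hi, mode="middle"):
    """Return the index of the pivot within items[lo..hi] (inclusive bounds)."""
    if mode == "first":
        return lo
    if mode == "last":
        return hi
    if mode == "middle":
        # Written as lo + (hi - lo) // 2, which also avoids overflow
        # in languages with fixed-width integers.
        return lo + (hi - lo) // 2
    raise ValueError(f"unknown pivot mode: {mode!r}")
```

On an already sorted range, the middle index holds the median (or one of the two middle values), so the partition is balanced, whereas the first and last indices hold the minimum and maximum.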

The effect of pivot selection on the complexity of Quicksort can be observed empirically, by counting the number of comparisons for three different types of input: random, sorted and uniform (all elements the same); and for three different pivot selection methods: first, last and middle. Here are some results (sorting 100 input elements, showing the number of comparisons for the first, last and middle selection modes, respectively):

- random (mean over 100 runs): 713.28, 715.17, 713.25

- sorted: 1001, 1001, 543

- same: 1001, 1001, 1001

The above results support what is already well-known: Quicksort performs worst when given sorted and uniform input. The former can be dealt with by selecting the middle element as the pivot (or even randomizing the pivot selection). The latter can be dealt with by checking for uniform input prior to sorting, which takes $O(N)$ time.
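
The uniform-input check mentioned above is a single pass over the data. A minimal sketch, where `is_uniform` and `sort_with_precheck` are hypothetical names and `sort_fn` stands in for whichever Quicksort implementation is being used:

```python
def is_uniform(items):
    """Return True if all elements are equal (a single O(N) pass)."""
    return all(x == items[0] for x in items)


def sort_with_precheck(items, sort_fn=sorted):
    """Skip sorting when every element is the same; otherwise delegate to sort_fn.

    sort_fn is a placeholder for a Quicksort implementation; Python's
    built-in sorted is used as the default only to keep the sketch runnable.
    """
    if is_uniform(items):
        return list(items)
    return sort_fn(items)
```

An empty collection counts as uniform here, because `all` over an empty sequence is `True` and `items[0]` is never evaluated.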

To obtain these results, I used GDB (to set breakpoints and count the number of hits), Python (to generate the input) and bash (to tie everything together). The entire code for reproducing these results is here.
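
As an alternative to counting breakpoint hits, the comparisons can be counted directly in code. The sketch below is not the setup described above: it assumes a Lomuto partition and counts one comparison per element tested against the pivot, and the name `count_comparisons` is hypothetical. Absolute counts depend on the partition scheme and on what exactly is counted, so they are not expected to match the figures reported above; only the relative behavior across input types and pivot modes is comparable.

```python
import random


def count_comparisons(items, mode):
    """Quicksort a copy of items; return the number of element-vs-pivot comparisons."""
    data = list(items)
    comparisons = 0
    stack = [(0, len(data) - 1)]  # explicit stack avoids deep recursion on bad pivots
    while stack:
        lo, hi = stack.pop()
        if lo >= hi:
            continue
        if mode == "first":
            p = lo
        elif mode == "last":
            p = hi
        else:  # "middle"
            p = (lo + hi) // 2
        data[p], data[hi] = data[hi], data[p]  # park the pivot at the end
        pivot = data[hi]
        boundary = lo
        for i in range(lo, hi):
            comparisons += 1
            if data[i] <= pivot:
                data[i], data[boundary] = data[boundary], data[i]
                boundary += 1
        data[boundary], data[hi] = data[hi], data[boundary]
        stack.append((lo, boundary - 1))
        stack.append((boundary + 1, hi))
    assert data == sorted(items)  # sanity check: the copy really is sorted
    return comparisons


n = 100
inputs = {
    "random": random.sample(range(n), n),
    "sorted": list(range(n)),
    "same": [0] * n,
}
for name, values in inputs.items():
    counts = [count_comparisons(values, mode) for mode in ("first", "last", "middle")]
    print(name, counts)
```

With this particular scheme, sorted input with the first or last pivot and uniform input with any pivot all cost $99 + 98 + \dots + 1 = 4950$ comparisons for 100 elements, while sorted input with the middle pivot stays close to $N \log_2 N$.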

Comparison with Mergesort

- First, Quicksort does all of its work in the divide (partition) step. The conquer step is trivial, since after recursion is complete, the array is completely sorted. In contrast, Mergesort does very little work in the divide step, and does most of its work after the recursion is complete.

- Second, the algorithms have different computational complexity: Mergesort is consistently $O(N \log N)$, while Quicksort is $O(N \log N)$, $O(N \log N)$ and $O(N^2)$ in the best, average and worst case, respectively.

- Third, the algorithms have different space complexity: unlike Mergesort, Quicksort's partition step can be implemented in-place without significant impact on complexity.

- Fourth, unlike Mergesort, Quicksort is not a stable sorting algorithm, since the partition step reorders elements. Stable implementations of Quicksort do exist, but are not in-place.

- Finally, Mergesort is easier to parallelize than Quicksort, since the divide step is simpler with the former.
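
The stability point can be seen on a three-element example. The sketch below assumes an in-place Lomuto partition with the last element as the pivot (the name `quicksort_by_key` is hypothetical), and uses Python's built-in `sorted`, which is guaranteed to be stable, as the stable reference.

```python
def quicksort_by_key(records, lo=0, hi=None):
    """In-place Quicksort of (key, label) records, comparing keys only."""
    if hi is None:
        hi = len(records) - 1
    if lo >= hi:
        return
    pivot_key = records[hi][0]  # assumption: the last element is the pivot
    boundary = lo
    for i in range(lo, hi):
        if records[i][0] <= pivot_key:
            records[i], records[boundary] = records[boundary], records[i]
            boundary += 1
    # This long-distance swap is what can reorder records with equal keys.
    records[boundary], records[hi] = records[hi], records[boundary]
    quicksort_by_key(records, lo, boundary - 1)
    quicksort_by_key(records, boundary + 1, hi)


records = [(1, "a"), (1, "b"), (0, "c")]

unstable = list(records)
quicksort_by_key(unstable)
print(unstable)  # [(0, 'c'), (1, 'b'), (1, 'a')]: "b" now precedes "a"

stable = sorted(records, key=lambda r: r[0])
print(stable)    # [(0, 'c'), (1, 'a'), (1, 'b')]: original order of equal keys kept
```

Both results are correctly ordered by key; they differ only in the relative order of the two records with key 1.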

Conclusion

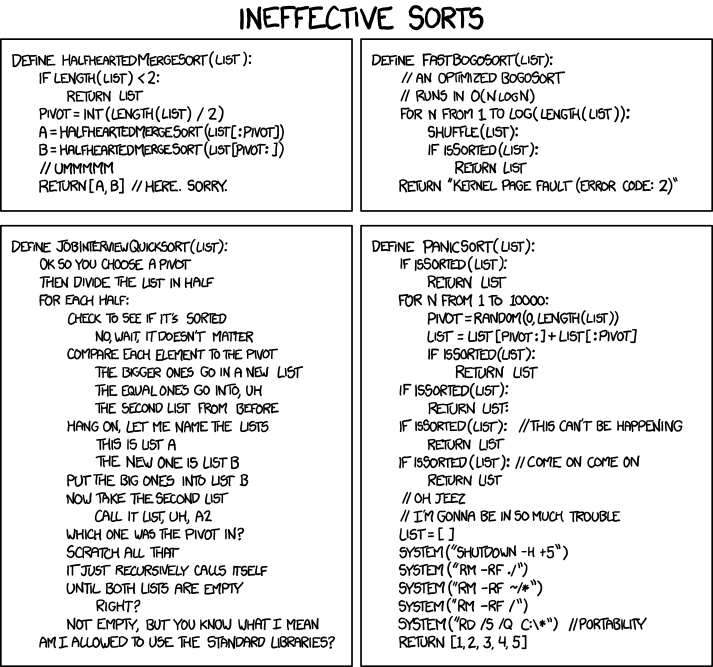

If you're one of the chosen few that managed to soldier on through the entire article, give yourself a pat on the back. Thanks for reading the entire thing. Please reward yourself with a refreshing chuckle at this sorting-related xkcd.com comic:
